Artificial Intelligence and privacy

Amid the public debate on the virtues and risks of tools such as ChatGPT, the European Union has debated and approved the first regulation on artificial intelligence, which also addresses privacy, with the aim of governing this disruptive technology and its mass use.

Among the risks of this new technology are, undoubtedly, those related to people's privacy. AI relies on processing large amounts of data, including personal data, obtained from many different sources: data published on social media, posts, or any other source that may give rise to data processing. Sometimes the data subject is not even aware of this processing.

AI systems are constantly fed by the information they consume. The collection and use of that information is therefore an inseparable part of the system itself.

Artificial Intelligence and privacy: what are the risks?

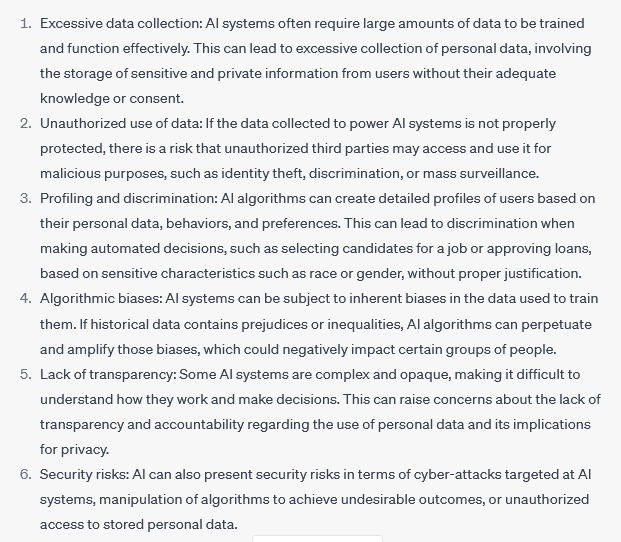

In terms of privacy, the main risks can be grouped into three areas:

- Security risks.

AI systems rely on the constant, large-scale collection of data and information, including personal data. The more data is collected and analysed, the greater the damage that a successful attack on an AI system could cause to data subjects, and the harder that damage is to contain.

- Risks of discrimination against people.

If a system makes decisions based on the data it collects (for example, in a recruitment process that analyses candidates' social media profiles), discriminatory situations may arise. The problem is compounded by the fact that candidates may not even be aware that their data is being processed.

- Risks associated with unauthorised use.

Improvements in techniques such as deepfakes, which we discussed here more than three years ago, can drag people into undesirable situations, such as fake news, with potentially serious consequences for their lives. Obviously, the use of this type of technology involves the unauthorised use of people's images.

Those, summarised in a few human-written lines, are the main risks. Now let's see what AI itself says about privacy risks:

There is no doubt that, once again, we are dealing with a revolutionary technology whose mass use is foreseeable. It generates new privacy risks that ultimately come down to controlling who uses our personal information and for what purpose.

What measures does the new regulation contemplate to minimise privacy risks?

To begin with, the European Union's AI regulation directly prohibits a series of practices. More importantly, it applies both to developers established in the EU and to non-European companies whose practices have an impact on European citizens, broadly in line with the GDPR model.

The forbidden practices include:

- AI tools that deploy subliminal techniques beyond a person’s consciousness to distort their behaviour in a way that causes or may cause physical or psychological harm to that person or another person.

- AI systems that exploit any of the vulnerabilities of a specific group of people due to their age or physical or mental disability in order to materially distort the behaviour of a person belonging to that group, in a way that causes or is likely to cause that person (or another person) physical or psychological harm.

- The use of AI systems by public authorities for the assessment or classification of the trustworthiness of natural persons over a given period of time based on their social or personal behaviour.

- The use of "real-time" remote biometric identification systems in publicly accessible spaces for law enforcement purposes, unless one of the exceptions provided for applies.

The proposal is also based on the principle of risk management. Therefore, any project involving the use of AI will require a prior assessment of its impact on the processing of personal data. This will also entail applying principles such as privacy by design and by default.

The planned measures affect both companies that develop AI products and those that do not develop them but use them. Examples include customer service chats or similar solutions that businesses implement.

In short, this is a first serious legislative attempt to regulate the use of AI. We must keep a close eye on how this new regulation is implemented. We must also watch the impact, difficult to foresee right now, that AI may have on privacy and the protection of personal data.

As always, if you need more information about artificial intelligence and privacy, don't hesitate to contact us!

Information on data protection

Company name

LEGAL IT GLOBAL 2017, SLP

Purpose

Providing the service.

Sending the newsletter.

Legal basis

Compliance with the service provision.

Consent.

Recipients

Your data will not be shared with any third party, except service providers with which we have signed a valid service agreement.

Rights

You may access, rectify or delete your data and exercise the rights indicated in our Privacy Policy.

Further information

See the Privacy Policy.